bcrowell.github.io

Psychopathic AI: a call to arms

Our society has reached a tipping point with artificial intelligence. The harm is skyrocketing, and the claimed benefits are mostly snake oil. In this blog post, I describe the real-world harm being caused by four different AI systems, all of them built in the pursuit of profit and operating in shady areas of the law.

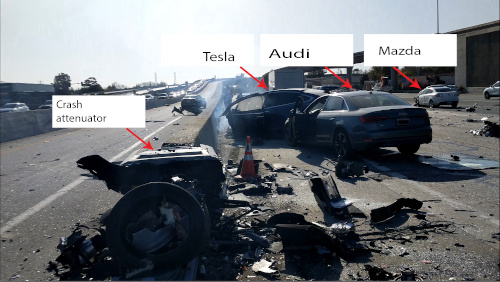

Scene of a crash caused by a Tesla on autopilot, Mountain View, 2018.

The psychopathic poster child of this historical moment is Elon Musk. He’s fraudulently and illegally marketed a $15,000 feature that falsely claims to add “full self-driving” to a Tesla. In a 2019 crash, a Tesla on autopilot barreled down a freeway off-ramp, ran a red light, and killed two people in a Honda. The car’s owner is being tried for manslaughter, but the real criminal is Musk, who has told drivers that they can take their hands off the wheel and stop paying attention.

In 2021, Tesla stopped releasing data from accidents caused by their system, which is a beta test being conducted on human guinea pigs. There are other systems using better sensors that are much more reliable, but Musk doesn’t want to use them, because they’d take about $500 off of his bottom line. It’s a race to the bottom, and Tesla plans to win.

Musk is also one of the founders of OpenAI, which makes the chatbot ChatGPT and the art pastiche system DALL-E. Continuing the same snake oil pattern, OpenAI encourages naive people to believe that these systems are both smarter and more reliable than they are. Both of these systems also have the potential for serious social harm.

Many people using ChatGPT don’t realize that it basically produces fluent nonsense. But as with the Tesla autopilot system, users are fooled by the system’s behavior into thinking that it works better than it does. At least the autopilot is usually accurate. ChatGPT simply doesn’t care. A librarian writes:

Been seeing a lot about #ChatGPT lately and got my first question at the library this week from someone who was looking for a book that the bot had recommended. They couldn’t find it in our catalog. Turns out that ALL the books that ChatGPT had recommended for their topic were non-existent. Just real authors and fake titles cobbled together. And apparently this is known behavior.

Many users seem to believe that the system is just in its early stages and will get more truthful in the future, but AI expert Gary Marcus says that with the technique it uses, further training makes it “more fluent but no more trustworthy.”

An even bigger problem is that you don’t necessarily know when you’re interacting with ChatGPT. A philosophy professor at Furman was mystified when he read a take-home exam paper generated by ChatGPT. At first he just noticed that “…it was beautifully written. Well, beautifully for a college take home exam, anyway.” But “despite the syntactic coherence …, it made no sense.” Personally, when I run into people online who seem to be spouting nonsense about science, I now feel like I should probably cut and paste their text into OpenAI’s detector, https://openai-openai-detector.hf.space/, which is supposed to detect whether text was produced by one of its models.

This is clearly unsustainable. The problem is that human beings have limited time available for these games, but the machines never get tired. Programming Q&A site Stack Overflow has been forced into a battle against wrong but well-written ChatGPT answers: “[The] volume of these answers (thousands) and the fact that the answers often require a detailed read by someone with at least some subject matter expertise in order to determine that the answer is actually bad has effectively swamped our volunteer-based quality curation infrastructure.”

Even worse is the capacity for what’s known as coordinated inauthentic behavior. Imagine a congressional representative on the receiving end of a letter-writing campaign in which all the letters actually came from an AI.

The visual art systems have a whole different set of issues. Their inputs are copyrighted photos and art that have been scraped from the web. Says technology journalist Andy Baio, “The academic researchers took the data, laundered it, and it was used by commercial companies.” There are millions and millions of selfies in there, which can now be used for any purpose. There is also a vast amount of artwork done in an artist’s own carefully cultivated style, which can now be reproduced cheaply in vast quantities. Artist Hollie Mengert says, “For me, personally, it feels like someone’s taking work that I’ve done, you know, things that I’ve learned (I’ve been a working artist since I graduated art school in 2011) and is using it to create art that I didn’t consent to and didn’t give permission for.”

And now Microsoft is jumping in with software called VALL-E that is supposed to be able to imitate anyone’s voice based on a three-second sample. There are obvious applications. A Chinese political dissident gets a call from her mother, but it’s actually the police. A woman who has escaped an abusive relationship gets a voice mail that she thinks is from an old friend. Deepfake pornography can now use not just Gal Gadot’s face but also her voice. (The video portion of these deepfakes is now apparently really easy to do on a normal PC.) And as with DALL-E, there is a misuse of people’s personal data as the “seed” for the algorithm. The fakes are based on approximate matches to voices in the database used by Microsoft, which consists mostly of people who recorded audiobooks. You thought you were helping blind people access Alice Walker, but actually you helped a stalker track down his ex. Thanks, Microsoft!

I don’t know about you, but to me this seems like a loathsome vision of what the future should be. I’m not normally a big fan of government regulation, but it’s necessary here. California has taken a step in the right direction with SB 1398, which makes it more practical to go after Tesla for its misleading advertising.

Ben Crowell, 2023 Jan. 16

This post is CC-BY-ND licensed.

Photo credits

Tesla crash scene - NTSB, public domain - https://en.wikipedia.org/wiki/File:Mtn_view_tesla_scene_graphic_(28773524958).jpg